Facial recognition technology — which is used by police departments to identify suspects and find missing children — is under attack from the Left because of its alleged racial biases. Last week, five Democratic senators, including Elizabeth Warren, Bernie Sanders, and Ed Markey, and three representatives, including Ayanna Pressley and Rashida Tlaib, reintroduced legislation that would ban the federal government from using the technology.

“We cannot allow racist, faulty facial recognition technology to continue to be used in the surveillance and criminalization of Black and Brown communities,” said Tlaib in announcing the legislation.

The other lawmakers echoed this language, with Sanders saying that the technology “deepens racial bias in policing.” Pressley added that the technology is “flawed and systemically biased” and that it has “exacerbated the criminalization and over-surveillance that Black and brown communities face.”

The falsehood that facial recognition technology is racist has been echoed everywhere in progressive circles and has already resulted in the substantial curtailment of the technology.

Following the death of George Floyd, major developers of the technology shifted course in the face of public pressure from those alleging facial recognition is racially biased. IBM pledged to stop developing facial recognition technology altogether in pursuit of racial justice. Microsoft announced that it would withhold its technology from police departments until more federal regulations were passed. And Amazon announced that it would impose a moratorium on police use of its facial recognition technology.

Many cities followed along and banned facial recognition technology on the premise that it is racist, systematically biased, and worsens disparities in policing that harm African Americans. Boston completely banned the use of the technology in June 2020 in the immediate aftermath of the Black Lives Matter protests that rocked the city. And Portland, Oregon, and Portland, Maine, soon followed suit.

Even the UN is on board with the allegation that facial recognition technology is racist. In November 2020, the UN’s Committee on the Elimination of Racial Discrimination concluded that the technology “risk[s] deepening racism and xenophobia.”

Another frequent liberal gripe with facial recognition technology (including from Rep. Alexandria Ocasio-Cortez) is that it often recognizes transgender people as belonging to their biological sex, and it cannot recognize nonbinary, agender, or gender-fluid identities.

For progressives fixated on systemic bias and racial injustice, the contention that facial recognition technology is inherently racist is an attractive one. It extends the ideological position that society is fundamentally racist into modern technology. Look, the line goes, America is so racist that even its computer systems echo that racism.

But despite the progressive meltdown, a landmark 2019 federal study by the National Institute of Standards and Technology found that the most accurate facial recognition technologies “did not display racial bias,” according to Daniel Castro, vice president of the Information Technology and Innovation Foundation.

Castro told The American Spectator that facial recognition technology is thus likely to allow police to identify suspects equally across gender and race.

Mary Haskett, the CEO of Blink Identity, expressed the same opinion as Castro on the results of the federal study.

“The top performing algorithms had no statistically significant demographic bias,” she told The American Spectator. “They found a .002 percent difference between the false positive rate between black females and white males.” (A false positive is when the algorithm incorrectly matches a face to one of the images in a system.)
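To make the metric concrete, here is a minimal Python sketch of how a false positive rate could be computed separately for each demographic group and then compared. The trial data, the group labels, and the function name `false_positive_rate_by_group` are hypothetical illustrations, not figures or code from the federal study.

```python
from collections import defaultdict


def false_positive_rate_by_group(trials):
    """Return the false positive rate for each group.

    Each trial is (group, is_same_person, algorithm_said_match).
    Only trials comparing two different people can yield a false positive.
    """
    impostor_trials = defaultdict(int)
    false_positives = defaultdict(int)
    for group, is_same_person, said_match in trials:
        if not is_same_person:
            impostor_trials[group] += 1
            if said_match:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in impostor_trials.items()}


# Made-up trials for illustration only (not study data).
trials = [
    ("group_a", False, False),
    ("group_a", False, True),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, True),   # genuine match, ignored for this metric
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", True, True),   # genuine match, ignored for this metric
]

rates = false_positive_rate_by_group(trials)
gap = abs(rates["group_a"] - rates["group_b"])
print(rates, gap)
```

A demographic comparison of the kind Haskett describes comes down to the size of `gap` for a given algorithm; the closer it is to zero, the smaller the difference between groups.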

The federal study also concluded that differences in false positives across races were “undetectable” among the most accurate algorithms when performing what is called a “one-to-many” search. This means that one face is searched for among many images. For example, police would perform this type of search if they were looking to identify a suspect from among a database of images.

But Castro and Haskett’s reading of the study departs from the prevailing one. The ACLU, for instance, called the same study “damning” and said it showed that the technology “generally work[s] best on middle-aged white men’s faces, and not so well for people of color, women, children, or the elderly.” The ACLU endorsed the legislation proposed last week to ban facial recognition software and has called it an “anti-Black technology.”

The Washington Post’s response to the same federal study was to write an article headlined, “Federal study confirms racial bias of many facial-recognition systems, casts doubt on their expanding use.”

Castro explained that so many people misinterpreted the federal study because it examined 200 facial recognition technologies submitted by developers, many of which were of very low quality. When looking at all the facial recognition technologies, including the worst and the best, there were differences in false positives across racial demographics.

“Talking about ‘averages’ or even a ‘majority’ is meaningless,” Castro said in reference to examining the results of the study. “It would be like reviewing the safety of 200 different aircraft, mostly experimental ones from around the world, and using the ‘average’ findings to discuss aircraft safety when most people are flying in Boeing and Airbus aircraft.”

Police would never use the low-quality facial recognition technologies that showed differences across races, Castro explained.

He noted that police do not use facial recognition technology alone as a reason to detain someone. Instead, he explained, it is used as an “investigative tool.” Police would, for instance, use facial recognition software to find the 10 most likely matches from a database and know that these are only leads that could possibly help them to identify the suspect.
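The “investigative tool” workflow Castro describes can be sketched as a ranked one-to-many search. The Python below is a simplified illustration under stated assumptions: faces are represented as short made-up numeric vectors, similarity is plain cosine similarity, and the names `top_candidates`, `gallery`, and `probe` are hypothetical. Production systems differ in how they encode and score faces.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_candidates(probe, gallery, k=10):
    """Rank every gallery record against the probe and keep the k best.

    The result is a list of (record_id, score) leads for human review,
    not an identification.
    """
    scored = [(cosine_similarity(probe, vec), rec) for rec, vec in gallery.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [(rec, score) for score, rec in scored[:k]]


# Made-up vectors for illustration only.
gallery = {
    "record_001": [1.0, 0.0, 0.0],
    "record_002": [0.0, 1.0, 0.0],
    "record_003": [0.6, 0.8, 0.0],
}
probe = [1.0, 0.0, 0.0]

leads = top_candidates(probe, gallery, k=2)
print(leads)
```

The default of `k=10` mirrors the “10 most likely matches” in Castro’s example; the search returns a shortlist for investigators to check rather than a single answer.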

Castro stressed that police already use human facial recognition to identify suspects and that humans are actually “less accurate than computers at facial recognition.” (Haskett said that humans are about 70 percent accurate at facial recognition of strangers. Facial recognition technologies can be more than 99 percent accurate.)

“Facial recognition technology offers opportunities to improve accuracy and reduce bias from what exists today in police departments,” Castro said.

Haskett added that using an algorithm for facial recognition would be a lot easier than “changing the bias in the heart and mind of a police officer.”

So if it’s simply not true that facial recognition algorithms are racially biased, then why is there an entire movement on the Left, including Bernie Sanders, Elizabeth Warren, and Rashida Tlaib, that wants to outlaw the technology because of its alleged racism?

It all goes back to a woman named Joy Buolamwini. Buolamwini is a graduate researcher at the Massachusetts Institute of Technology who founded an organization, the Algorithmic Justice League, which aims to make artificial intelligence more “equitable.”

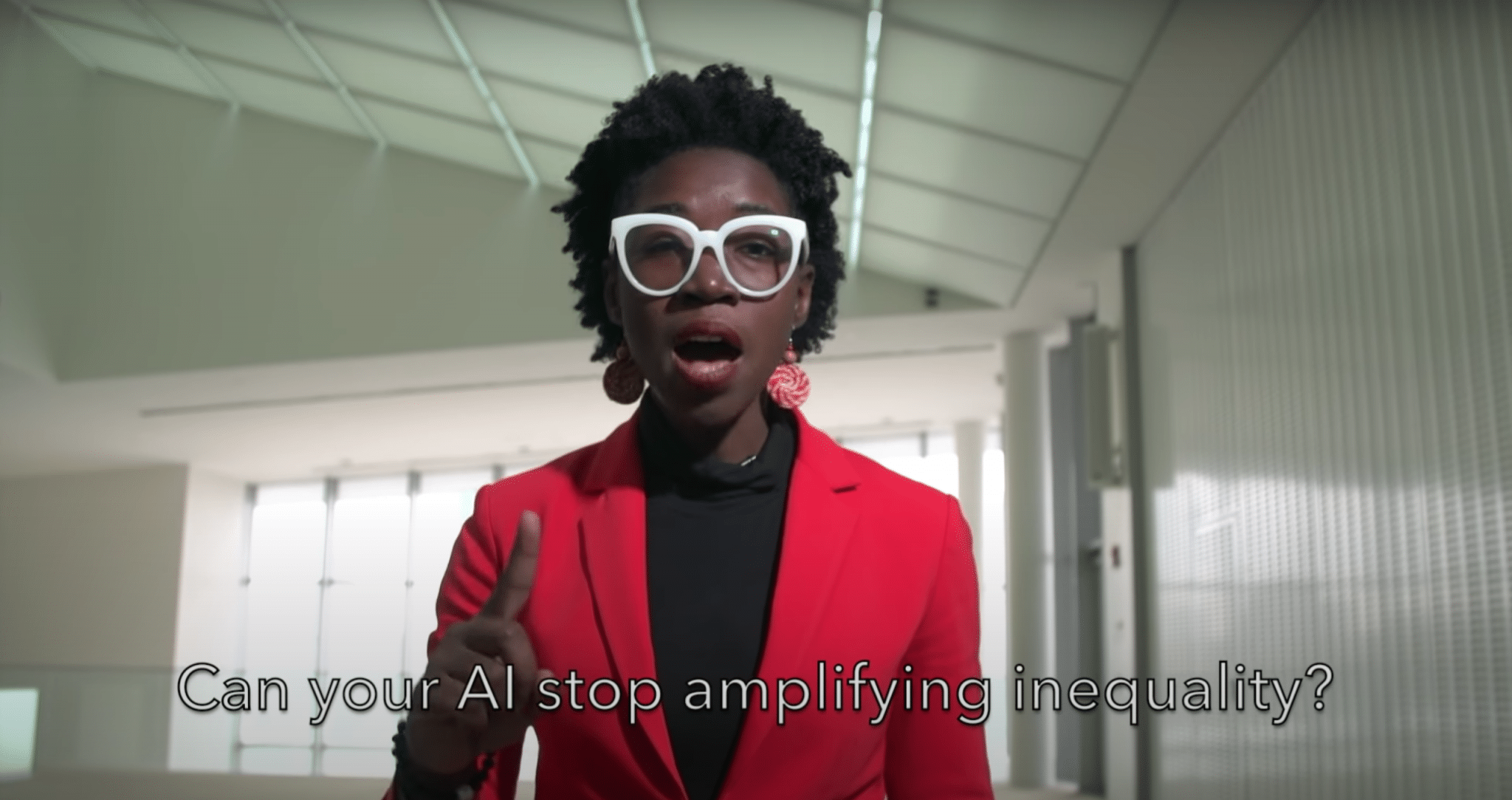

Joy Buolamwini confronts IBM over its alleged biases, March 1, 2019 (Joy Buolamwini/YouTube screenshot)

In 2018, Buolamwini published research concluding that some facial analysis algorithms misclassified black women 35 percent of the time but nearly always got it right with white men. That study won her worldwide acclaim. She was featured in the New York Times, the Guardian, and Time. In addition, a 2020 documentary about her work, Coded Bias, is now streaming on Netflix.

The film’s synopsis says that it “explores the fallout of MIT Media Lab researcher Joy Buolamwini’s discovery that facial recognition does not see dark-skinned faces accurately, and her journey to push for the first-ever legislation in the U.S. to govern against bias in the algorithms that impact us all.”

Some have concluded that it was Buolamwini’s work that got IBM, Microsoft, and Amazon to put a stop to their facial recognition technology.

“Joy Buolamwini got Jeff Bezos to back down,” concluded the magazine Fast Company in 2020.

Buolamwini and like-minded advocates have achieved the near shutdown of an entire technology in the United States. But the reason they pushed for this shutdown — the technology’s supposed racial bias — was already proven false in 2019.

The conversation should instead be focused on the actual pertinent issue regarding facial recognition technology: privacy. But it’s impossible to have an honest debate on privacy when the Left is derailing the technology on false premises. When ideology reigns supreme, it seems the facts don’t matter all that much.